
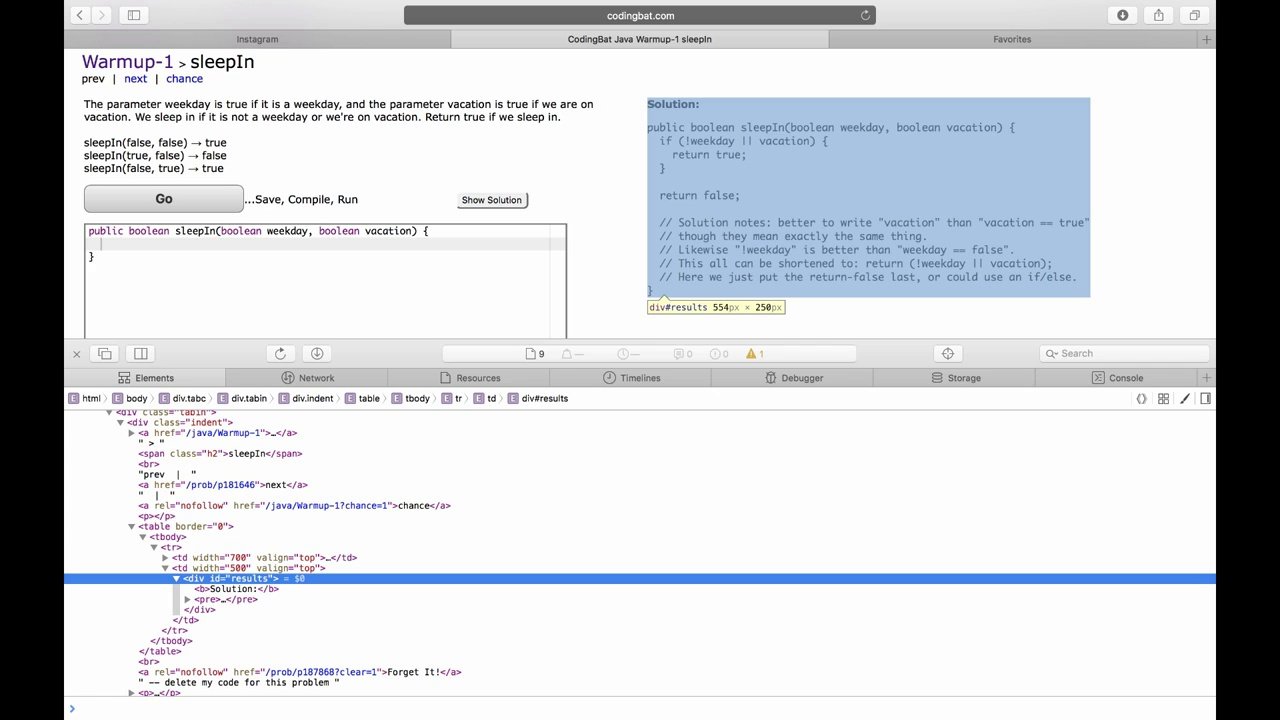

It is necessary to understand the HTML and CSS used for a web page. Once you installed the external library, you should use the sp_execute_external_script stored procedure and check its version.Īs shown below, its version is 0.3.2 for my SQL instance. Navigate to the bin folder (C:\Program Files\Microsoft SQL Server\MSSQL15.INST1\R_SERVICES\bin) in your SQL instance and run the following command.

#Webscraper python sql install#

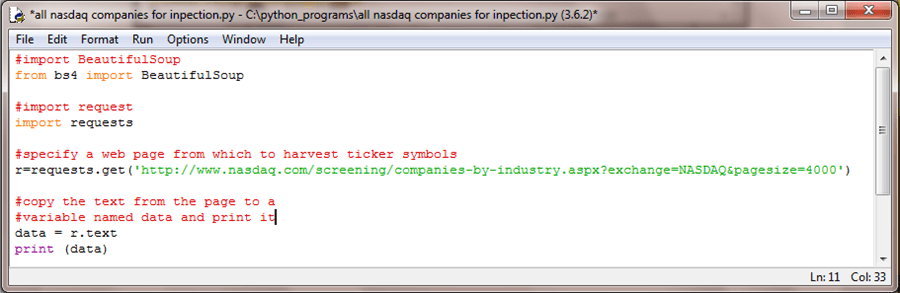

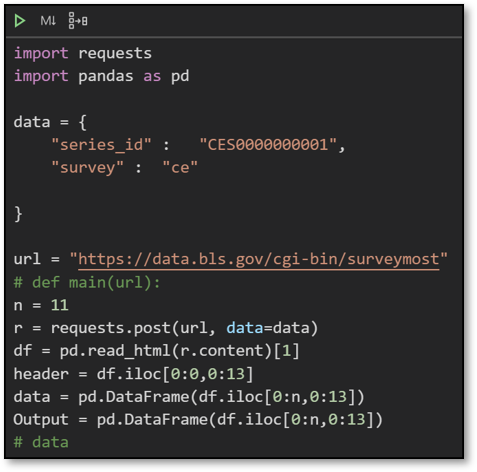

First, we need to install it using the Microsoft R client. In this article, we use the rvest library for web scrapping. Import RVest library for SQL Server R scripts In the previous articles for SQL Server R scripts, we explored the useful open-source libraries for adding new functionality in R. SQL Machine Learning language helps you in web scrapping with a small piece of code.

For this automation, usually, we depend on the developers to read the data from the website and insert it into SQL tables. You can manually copy data from a website however, if you regularly use it for your analysis, it requires automation. You can extract the information in a table, spreadsheet, CSV, JSON. Web Scraping is a process to extract the data from the websites and save it locally for further analysis. In the Power BI Desktop articles, we explored the way to fetch data directly from the URL. For example, users can browse SQLShack for all useful SQL Server related articles. All required information is available on the websites. The web is a significant source of data in the digital era. Please subscribe if you’d like to get an email notification whenever I post a new article.In this article, we will explore Web Scraping using R Scripts for SQL Machine Learning.
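The article performs this step with R and the rvest library inside SQL Server. For comparison, here is a minimal Python sketch of the same idea that uses only the standard library. `html.parser` extracts the cells of an HTML table that is selected by its `class` attribute, and an in-memory `sqlite3` database stands in for the SQL Server table. Everything in the sketch is hypothetical: the class name `TableRowParser`, the function `scrape_to_sqlite`, the `articles` class and table schema, and the sample markup. A real page would be downloaded first, for example with `urllib.request`, and its HTML inspected in the browser before choosing what to match.

```python
# Hypothetical sketch: names, schema and sample HTML are illustrative only.
import sqlite3
from html.parser import HTMLParser


class TableRowParser(HTMLParser):
    """Collect cell text, row by row, from <table> elements whose class list
    contains `table_class`. Nested tables are not handled."""

    def __init__(self, table_class):
        super().__init__()
        self.table_class = table_class
        self.rows = []          # finished rows, each a list of cell strings
        self._in_table = False
        self._row = None        # row being built, or None outside a <tr>
        self._cell = None       # text fragments of the current cell, or None

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if tag == "table":
            # Only tables carrying the wanted CSS class are scraped
            classes = (attrs.get("class") or "").split()
            self._in_table = self.table_class in classes
        elif self._in_table and tag == "tr":
            self._row = []
        elif self._in_table and tag in ("td", "th") and self._row is not None:
            self._cell = []

    def handle_endtag(self, tag):
        if tag == "table":
            self._in_table = False
        elif self._cell is not None and tag in ("td", "th"):
            self._row.append("".join(self._cell).strip())
            self._cell = None
        elif self._row is not None and tag == "tr":
            if self._row:
                self.rows.append(self._row)
            self._row = None

    def handle_data(self, data):
        if self._cell is not None:
            self._cell.append(data)


def scrape_to_sqlite(html, conn, table_class="articles"):
    """Parse the matching HTML table and insert its body rows into SQLite.
    Returns the number of rows inserted."""
    parser = TableRowParser(table_class)
    parser.feed(html)
    parser.close()
    if not parser.rows:
        return 0
    _header, *body = parser.rows                    # first row holds the <th> captions
    body = [row for row in body if len(row) == 2]   # keep well-formed rows only
    conn.execute("CREATE TABLE IF NOT EXISTS articles (title TEXT, author TEXT)")
    # Parameterized INSERT: scraped text is never spliced into the SQL string
    conn.executemany("INSERT INTO articles (title, author) VALUES (?, ?)", body)
    conn.commit()
    return len(body)


# Hypothetical page markup; the second table is ignored because of its class
SAMPLE_HTML = """
<html><body>
  <table class="articles">
    <tr><th>Title</th><th>Author</th></tr>
    <tr><td>Article A</td><td>Author 1</td></tr>
    <tr><td>Article B</td><td>Author 2</td></tr>
  </table>
  <table class="ads"><tr><td>ignore me</td></tr></table>
</body></html>
"""

if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")
    print(scrape_to_sqlite(SAMPLE_HTML, conn))  # 2
    print(conn.execute("SELECT title, author FROM articles ORDER BY title").fetchall())
```

Matching on the table's CSS class is the reason the page's HTML and CSS have to be understood first. The selector determines which part of the page is kept. The parameterized `INSERT` keeps scraped text out of the SQL statement itself.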


Scraping PDF data in structured form is straightforward with tabula-py. We just need to give the location of the tabular data on the PDF page as the (top, left, bottom, right) coordinates of the area. In practice, you will find the right values by trial and error.

```python
import tabula as tb

file = 'state_population.pdf'
# area = (top, left, bottom, right) of the table on page 1
# In recent tabula-py versions, read_pdf returns a list of DataFrames
data = tb.read_pdf(file, area=(300, 0, 600, 800), pages='1')
```

If the PDF page only contains the target table, we don't even need to specify the area, because tabula-py should detect the rows and columns automatically.

Next, we will explore something more interesting: scraping PDF data in an unstructured format. Statistical analysis, data visualization and machine learning models need the data in tabular form (panel data). However, a lot of data is only available in an unstructured format. For example, HR staff are likely to keep historical payroll data that was not created in tabular form.

In the following picture, we have an example of payroll data with mixed data structures. The left section holds data in long format, including employee name, net amount, pay date and pay period. The right section has pay category, pay rate, hours and pay amount. We need to take a few steps to transform the data into panel format.

As with structured data, we use tb.read_pdf to import the unstructured data. This time, we need to specify extra options to import the data properly:

```python
import tabula as tb

file = 'payroll_sample.pdf'
df = tb.read_pdf(file, pages='1', area=(0, 0, 300, 400),
                 columns=[...],         # x-coordinates of column boundaries (values elided in source)
                 pandas_options={...})  # options passed to pandas (values elided in source)
```

Many companies still process PDF data manually today. Python libraries let us save time and money by automating the scraping of PDF files and the conversion of unstructured data into panel data. When scraping data from PDF files, always read the terms and conditions posted by the author carefully and make sure you have permission to do so.

If you would like to continue exploring PDF scraping, please check out my other articles:

- Scrape Data from PDF Files Using Python and PDFQuery
- Scrape Data from PDF Files Using Python and tabula-py
- How to Convert Scanned Files to Searchable PDF Using Python and Pytesseract
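The steps that turn the payroll output into panel data are not shown above. Here is a minimal sketch of one possible approach. It assumes the left section has already been read as (label, value) string pairs and the right section as a header plus rows of strings, such as the cells of the DataFrames that `tb.read_pdf` returns. The function names `to_number` and `to_panel` and all sample values are hypothetical, and the sketch uses only the standard library rather than pandas.

```python
# Hypothetical sketch: field names follow the payroll example, values are invented.


def to_number(text):
    """Convert strings such as '$1,020.00' or '40' to float; return other text stripped."""
    cleaned = text.replace("$", "").replace(",", "").strip()
    try:
        return float(cleaned)
    except ValueError:
        return text.strip()


def to_panel(left_pairs, right_header, right_rows):
    """Build panel rows: one dict per pay line, each carrying the employee-level fields."""
    # Pivot the long-format (label, value) pairs into a single employee record
    employee = {label.strip(): to_number(value) for label, value in left_pairs}
    panel = []
    for row in right_rows:
        # Pair each cell with its column name from the right-hand header
        line = {col.strip(): to_number(cell) for col, cell in zip(right_header, row)}
        # Repeat the employee fields on every pay line (pay-line values win on clashes)
        panel.append({**employee, **line})
    return panel


SAMPLE_LEFT = [
    ("Employee Name", "Employee 1"),
    ("Net Amount", "$1,020.00"),
    ("Pay Date", "01/15/2021"),
    ("Pay Period", "01/01/2021 - 01/14/2021"),
]
SAMPLE_HEADER = ["Pay Category", "Pay Rate", "Hours", "Pay Amount"]
SAMPLE_ROWS = [
    ["Regular", "25.00", "40", "1,000.00"],
    ["Overtime", "37.50", "4", "150.00"],
]

if __name__ == "__main__":
    for record in to_panel(SAMPLE_LEFT, SAMPLE_HEADER, SAMPLE_ROWS):
        print(record)
```

Every output row carries the employee name and the pay date, so rows from many pay slips can be stacked into an employee-by-period panel. Passing the resulting list of dicts to `pandas.DataFrame` produces a regular table.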
